State-of-the-Art Driving Assistance System Leverages AI and Deep Learning to Help Respond Faster, Smarter

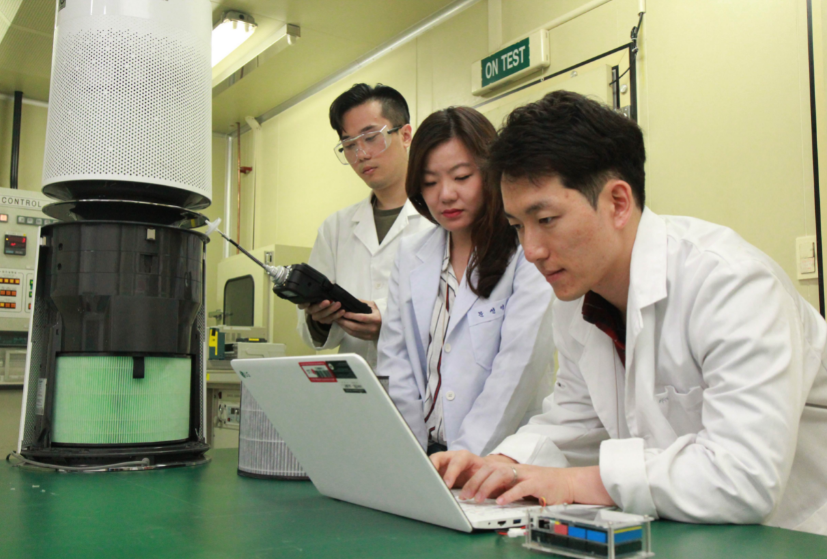

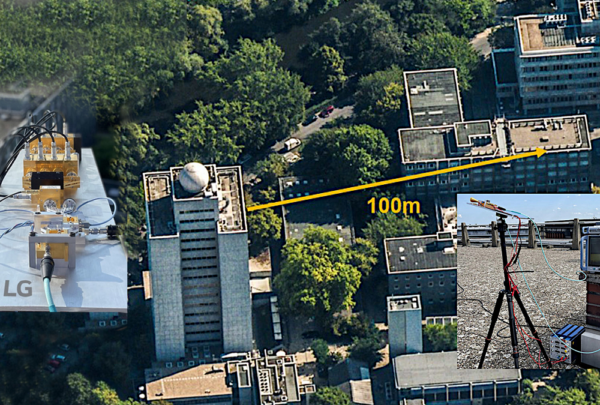

SEOUL, Oct. 6, 2021 — LG Electronics (LG) announced that its innovative Advanced Driving Assistance System (ADAS) camera module is available in the new 2021 Mercedes-Benz C-Class now rolling out in markets around the world. LG’s ADAS leverages the latest in automotive technology and works in tandem with Mercedes-Benz AG’s precise engineering to keep the driver and passengers of the new C-Class safer.

LG’s sophisticated ADAS supports a variety of essential safety-oriented functions, including Automatic Emergency Braking (AEB), Lane Departure Warning (LDW), Lane Keeping Assist (LKA), Traffic Sign Recognition (TSR), Intelligent Head-Light Control (IHC) and Adaptive Cruise Control (ACC), to help protect not only the vehicle’s occupants but also other motorists and pedestrians. A number of features supported by LG’s ADAS, such as AEB and LDW, will be mandatory for new vehicles in many countries by 2022.

The LG-designed ADAS reflects the company’s many years of leadership in mobile communications, image recognition and connectivity. Advanced AI and deep learning, both key to LG’s business strategy, enable the camera to collect and process a variety of traffic information to help drivers respond to road and traffic conditions in real time. The system accurately recognizes its surroundings, constantly analyzes the vehicle’s position relative to both moving and stationary objects, and automatically applies the brakes if a collision is imminent. Data from the camera also enables the system to alert drivers if they inadvertently stray into the next lane or get too close to the vehicle in front.

LG’s fast-growing Vehicle component Solutions (VS) Company counts a number of global auto brands, including Mercedes-Benz, Ford and Cadillac, as customers. Its joint venture, LG Magna e-Powertrain, manufactures e-motors, inverters, on-board chargers and e-drive systems for the EV market. The VS Company has been recognized for product safety by TÜV Rheinland, a global testing and certification organization, with the ADAS front camera module receiving ISO 26262 Functional Safety Product and Functional Safety Process certifications.

“LG has been collaborating with Mercedes-Benz AG/Daimler AG for nearly a decade in preparation of the future of mobility and autonomous vehicles,” said Kim Jin-yong, president of LG Electronics’ Vehicle component Solutions Company. “It’s our partnerships with auto industry leaders which enable us to bring our innovations to the vehicle space and help make the world’s roads safer for everyone.”

# # #